By now the app looks the part, but there is still a lot to do before we can even consider it a beta, let alone a final release. Besides actually recording the user’s voice, we need to learn how to show a second View (or screen) where the user can modify the audio file with the four effects we have planned.

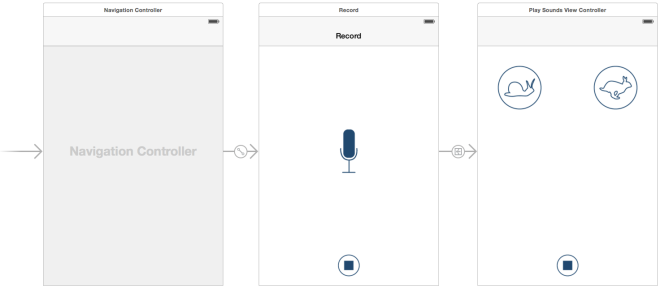

We’re introduced to the Navigation Controller class (UINavigationController), which we drag onto our storyboard. Not much is explained about it yet, other than that it’s responsible for managing the transition between multiple Views. We’ve added a second View, and now when you press the stop button, you are taken to that View. We learn how you can hook into both the first View, as you leave it, and the second View, as it loads, through a set of core view controller lifecycle methods: viewDidLoad, viewWillAppear, viewDidAppear, viewWillDisappear, and viewDidDisappear. (The older viewWillUnload and viewDidUnload methods have been deprecated since iOS 6, and there is no "will load" callback as such.)
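To make the lifecycle methods a little more concrete, here is a minimal sketch of a view controller that logs each callback as it fires. The class name `PlaySoundsViewController` is my own placeholder, not necessarily what the course uses, and the syntax is current Swift rather than whatever version the lesson was recorded with.

```swift
import UIKit

// Hypothetical class name, chosen for illustration only.
final class PlaySoundsViewController: UIViewController {

    // Called once, after the view hierarchy has been loaded into memory.
    override func viewDidLoad() {
        super.viewDidLoad()
        print("viewDidLoad")
    }

    // Called every time the view is about to be shown.
    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        print("viewWillAppear")
    }

    // Called every time the view has finished appearing on screen.
    override func viewDidAppear(_ animated: Bool) {
        super.viewDidAppear(animated)
        print("viewDidAppear")
    }

    // Called when the view is about to leave the screen,
    // for example when the user taps the back button.
    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        print("viewWillDisappear")
    }

    // Called after the view has been removed from the screen.
    override func viewDidDisappear(_ animated: Bool) {
        super.viewDidDisappear(animated)
        print("viewDidDisappear")
    }
}
```

Pushing this controller onto the navigation stack and then tapping back should print the callbacks in the order they are declared here, which is a handy way to see when each one runs.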

Skipping forward a bit, this View now contains three buttons: an image of a Snail to slow down the audio, an image of a Rabbit to speed up the audio, and another stop button.

Since I already outlined the purpose of this application, you probably already realize what those two image buttons are for. We’re introduced to the AVFoundation framework. Within this framework are various classes for handling audio and video, but in this app all we care about is audio. We define a new variable of type AVAudioPlayer (a class within AVFoundation) which will act as our internal audio player. Now, when either of the two buttons is pressed, the audio file is played (we loaded it in earlier, after learning how to add resources to our project and retrieve their path within the bundle). The difference between the two buttons is the rate at which the audio is played: this is controlled by the appropriately named rate property, which we set to either 0.5 for slow or 1.5 for fast (with 1.0 being the default speed). Note that the player’s enableRate property has to be set to true beforehand, otherwise changes to rate have no effect.
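Roughly, the playback side can be sketched as below. This is not the course’s code: the `RatePlayer` wrapper, the `PlayerError` type, and the resource name passed to it are all assumptions of mine for illustration. The AVAudioPlayer calls themselves (`enableRate`, `rate`, `prepareToPlay()`, `play()`, `stop()`) are the real API.

```swift
import AVFoundation

// Hypothetical error type for the sketch.
enum PlayerError: Error {
    case resourceNotFound
}

// Hypothetical wrapper around AVAudioPlayer; the course wires this
// directly into the view controller instead.
final class RatePlayer {
    private let player: AVAudioPlayer

    init(resource: String, fileExtension: String) throws {
        // Look the audio file up inside the app bundle.
        guard let url = Bundle.main.url(forResource: resource,
                                        withExtension: fileExtension) else {
            throw PlayerError.resourceNotFound
        }
        player = try AVAudioPlayer(contentsOf: url)
        // Rate changes only take effect if this is enabled
        // before prepareToPlay() is called.
        player.enableRate = true
        player.prepareToPlay()
    }

    // Restart the clip from the beginning at the given rate
    // (0.5 = half speed, 1.0 = normal, 1.5 = one and a half times).
    func play(atRate rate: Float) {
        player.stop()
        player.currentTime = 0
        player.rate = rate
        player.play()
    }

    func stop() {
        player.stop()
    }
}
```

With something like this in place, the Snail button’s action would call `play(atRate: 0.5)`, the Rabbit button’s action `play(atRate: 1.5)`, and the stop button’s action `stop()`.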

In closing, we now have a functional app which allows a user to press the microphone button to begin “recording,” a stop button which halts the “recording” and takes the user to the next view, a couple of buttons to play an audio file at different speeds, and a final stop button to stop the audio if it’s currently playing. To return to the previous view, the user simply presses the back button at the top left, which is automatically created and managed by the Navigation Controller.

While I do not know for sure what Part 3 will entail, I can only presume we’ll now be recording the user’s voice, saving it, and manipulating that audio file instead of the temporary Forrest Gump clip we were provided.